For over a decade, Insight has invested in artificial intelligence (AI) applications and the infrastructure underlying AI/ML development and deployment. The firm’s investments have spanned the ecosystem: applied AI companies such as dental AI platform Overjet, AI-driven lending software Zest AI, and AI-powered care coordination platform Viz.ai; generative AI companies such as content writing tools Jasper and Writer, data generation and mimicking tool Tonic, human avatar generator Hour One, and entertainment content localization tool Deepdub; and AI infrastructure companies Run:AI, Fiddler, and Weights & Biases.

Large language models (LLMs) are poised to accelerate fundamental disruption at both the application and infrastructure levels, with the power to transform nearly every industry and business.


Recent breakthroughs in transformer models, which are pretrained on unlabeled data and can then tackle new tasks from only a few examples (few-shot learning), have allowed model parameter counts to grow exponentially while accuracy keeps improving as scale increases. For the first time, AI can create. This market inflection is perhaps far bigger than the rise of the internet, mobile, or the public cloud. We are in the early innings of the next generational shift.

AI as the enabler of transformative software

That said, the rising attention on generative AI and its seemingly limitless potential and applicability makes it important to consider where in the stack value will ultimately accrue. The rise of the public cloud drove a platform shift, but the public cloud was an enabler, not a product itself. Like the public cloud, generative AI (and AI more broadly) is an enabler of transformative software, especially at the application layer. Some of the most exciting opportunities will emerge where AI is a more efficient and effective way to solve a core business problem and drive durable long-term value.

A step change in AI capabilities

Before 2017, AI was mostly about prediction tasks like classification or recommendation. With the publication of "Attention Is All You Need" in 2017, the world was introduced to a new neural network architecture called the transformer. Because transformers parallelize well during training, they can be trained on significantly larger datasets, enabling them to make more accurate predictions and, importantly, to create.

Over the last five years, we have seen three major developments democratizing access to these models and enabling more complex use cases:

- New, widely accessible LLMs have emerged, e.g., OpenAI opening GPT-3 to developers through its API.

- Computing costs have become cheaper.

- Innovations in MLOps technologies and infrastructure have accelerated.

As a result, developers have the infrastructure to build new, disruptive applications on top of cutting-edge, broadly accessible LLMs.

The rise of GPT-3 and public foundational models

Some of the most important publicly released models of the past two years include GPT-3 (and the recently released GPT-3.5 family), DALL-E, and Stable Diffusion. These foundation models are among today's key enablers of new AI applications because they make high-quality text and image generation broadly available. ChatGPT, in particular, stands out for producing remarkably accurate, detailed, human-like text and is finding its way into a plethora of everyday use cases. The recent launch of GPT-4 is expected to take GPT-3’s ability to understand context and generate language to a new level.

New large language model capabilities, combined with higher data quality and availability and declining computing costs, create favorable market conditions for the rise of applications that will fundamentally change how we work. Many existing applications will also be retrofitted with generative AI superpowers. We are also excited to see companies emerge that fill the gaps in the infrastructure stack needed to support this growing suite of applications.

The evolving generative AI stack

Generative AI has been picking up steam in VC and tech circles – but where will durable value accrue? Here’s how Insight believes the generative AI stack will evolve and where we see the most immediate and exciting investment opportunities.

Foundation models

Generative AI models are emerging across a wide range of data types, including text, images, audio, and video. Popular generative modeling techniques include generative adversarial networks (GANs), variational autoencoders (VAEs), flow-based models, and language models. While applications based on LLMs have captured our imagination and demonstrated the rapid progress of AI, this is just the start. We think of foundation models as more than LLMs.

New types of generative models will likely continue to be developed as research in this area advances. Foundation models are progressing rapidly and often already match or exceed human-generated content in quality.

Domain models

As data becomes king and foundation models proliferate, demand for AI-first applications will drive the emergence of many highly specialized domain- or vertical-specific models. These models may chain foundation models or be trained on specific data or curated styles, and tailored for specific industries (e.g., e-commerce, insurance, logistics). Some may be offered as Model-as-a-Service; others will likely become full-stack offerings with applications and tooling on top.

Tools

We believe tooling will be a critical layer in the value chain, with different types of tools arising across many dimensions. While some infrastructure needs will become more acute with the rise of LLMs (e.g., the growth in image and video data, higher query and data volumes), in other cases entirely new gaps in the stack or net new problems will emerge. Below are four key segments where we believe new infrastructure tools will evolve:

- Playgrounds: allow non-technical individuals to interact with and explore the capabilities of foundation or expert models; this will typically enable prosumer use cases.

- Programming Frameworks: streamline and automate AI-specific workflow needs to access and build applications on top of LLMs (i.e., enable the programmability of models). Key workflow needs include, but are not limited to, state management, instrumentation, and chaining.

- Model Lifecycle: support the training, deployment, and performance management of models relying on complex, unstructured data (e.g., the MLOps stack for unstructured data laid out in our Scale Up presentation).

- Management & Safety: manage the safety, compliance, and security concerns and requirements surrounding LLMs.
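To make the programming-framework needs above (chaining, state management, and instrumentation) more concrete, here is a minimal, framework-agnostic sketch in Python. It is illustrative only: `fake_llm` is a hypothetical stand-in for a call to a hosted model API, and `Step`, `Chain`, and the prompt templates are invented names, not the API of any particular framework.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call; it just wraps the prompt.
    return f"<answer to: {prompt}>"


@dataclass
class Step:
    name: str
    template: str    # prompt template filled in from the shared state
    output_key: str  # state key where this step's result is stored


@dataclass
class Chain:
    steps: List[Step]
    llm: Callable[[str], str] = fake_llm
    # Instrumentation: one record per model call (step, prompt, latency).
    trace: List[Dict[str, object]] = field(default_factory=list)

    def run(self, state: Dict[str, str]) -> Dict[str, str]:
        # State management: copy so the caller's dict is not mutated.
        state = dict(state)
        for step in self.steps:
            prompt = step.template.format(**state)
            start = time.perf_counter()
            result = self.llm(prompt)
            elapsed = time.perf_counter() - start
            # Chaining: later steps can reference this key in their templates.
            state[step.output_key] = result
            self.trace.append({"step": step.name, "prompt": prompt, "seconds": elapsed})
        return state


# Usage: summarize a document, then classify the summary.
chain = Chain(steps=[
    Step("summarize", "Summarize: {document}", "summary"),
    Step("classify", "Classify the topic of: {summary}", "topic"),
])
out = chain.run({"document": "Q3 sales rose 12%."})
print(out["topic"])
```

Swapping `fake_llm` for a real client is where a production framework would add retries, caching, and safety checks; the point of the sketch is only that each step reads from and writes to shared state while every call is logged.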

Applications

The next generation of software applications will emerge with AI as a first-class citizen. Data-driven applications will enable more personalized, improved customer experiences, but like successful traditional software, AI-first applications must solve an acute need and have attributes that suggest long-term durability (e.g., being embedded within a workflow, access to proprietary data, network effects).

Investor POV: What we’re excited about

Tools

The rise of foundation models, specifically LLMs, exposes many unserved and underserved gaps in the current infrastructure stack. New tools will need to emerge for developers, data scientists, and non-technical users to leverage LLMs within an enterprise. Hence, we are excited about products that improve the accessibility and usability of large language models. There are numerous challenges for new infrastructure technologies to address, including the raw, unstructured nature of image and video data, the static nature of existing LLM interfaces, the fragmentation of different models (which we believe will only continue as models become increasingly industry-specific), and the need for effective governance to ensure unbiased, responsible results.

Companies are already developing new frameworks that reimagine prompt engineering for the design and deployment of LLM apps and allow LLM applications to be built from composable components. Model lifecycle management solutions will take on even greater importance as LLM creation, deployment, and collaboration become more complex. Emerging solutions for dataset management and safety will establish important guardrails and processes against bias, in line with new regulations and expectations for the ethical use of AI.

We are excited about tooling platforms that make the lives of the “prompt engineer” or LLM-focused data scientist easier. They will form the backbone of next-gen model creation, deployment, and orchestration. The MLOps toolchain will continue to thrive as LLMs continue to multiply. For instance, Weights & Biases is a significant beneficiary as the hyperparameter tuning and version control solution for most LLM builders.

Horizontal applications

We believe every function in an organization with repetitive and/or skill-based work will be reshaped by foundation models, whether through democratized coding, sales generation, virtual customer support agents, or content creation for design and marketing. Just follow the Jasper.ai story to understand how disruptive a new entrant can be. That said, some of these functions will be reshaped more effectively by agile and innovative incumbents. Identifying where LLMs are better suited to power a feature rather than a standalone platform will be critical when investing in this space. It will also be important to find problems whose solutions must be deeply embedded in business workflows and draw on unique data sets.

Two areas where we believe new standalone platforms will arise are developer productivity and security.

Developer productivity: We see significant opportunities in coding automation tools that accelerate the development, testing, and delivery of software code. Developers spend hours per day on debugging and test/build cycles, time that computer-generated code and code-review tools can significantly reduce. An estimated $61B per year could be saved through these tools in reduced inefficiencies and software development costs. Moreover, democratizing product and technology development could open the doors of entrepreneurship and software development to non-developers, sparking innovation and value creation across the economy.

Security: One of the most exciting applications of generative AI to security is auto-remediation, where systems can predict and effectively address security vulnerabilities. AI/ML models already power fraud detection for major software and fintech companies, but the lack of quality data is a major issue. With generative AI, businesses can run more complex and widespread fraud simulations using synthetic data (e.g., fake photographs, fake PII) that compensates for scarce real-world data and enables more accurate detection rates.

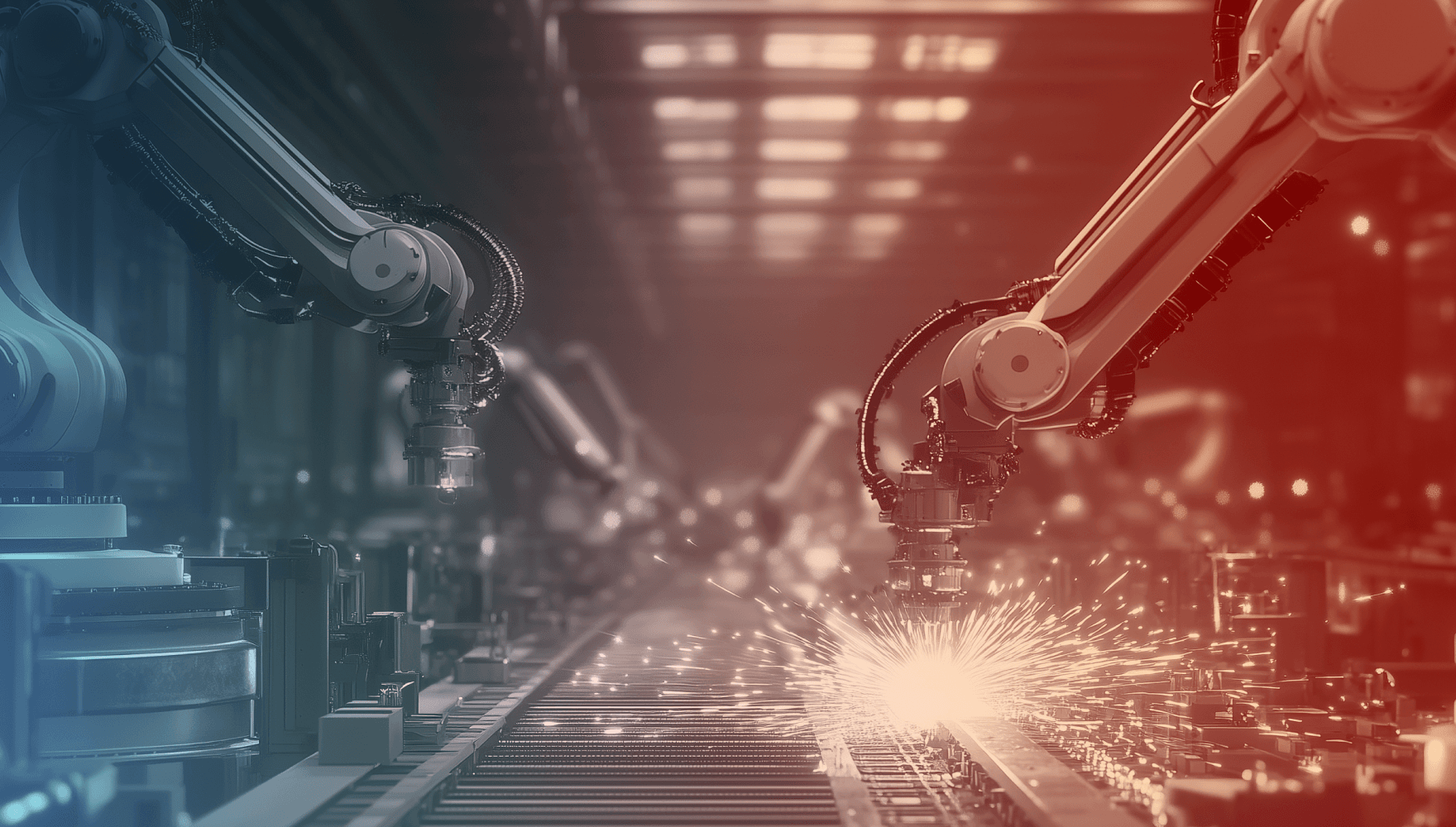

Vertical applications

Vertical-specific applications present some of the most significant opportunities for durable company creation, given the existence of proprietary data sets, tailored GTM motions, and the ability to embed deep into business workflows. We have been excited about sizable verticals with large and complex data that is mission-critical to businesses, in many cases combined with labor shortages and regulatory or compliance requirements.

Two examples of industries where exciting developments have emerged and where significant headroom for innovation remains are life sciences, and supply chain and logistics.

Life sciences: Generative AI offers many interesting use cases, including synthetic data creation for clinical trials, generative protein design based on protein folding models to accelerate drug discovery, and summarization of academic research papers. While adoption is in the early innings, the potential to accelerate drug discovery and approvals, improve patient outcomes, and deliver cost savings in healthcare is massive. Generative AI applications in life sciences that gain access to critical, hard-to-obtain clinical and patient datasets will build barriers to entry and deliver better results, creating a flywheel: as more patient data becomes accessible, models improve, outputs improve, and the cycle starts again.

Supply chain and logistics: 3D modeling and blueprint generation offer promising ways to automate product development, including design and component substitutions, leading to new products with lower costs, higher performance, and better sustainability. Generative AI will also enable document automation and contract generation, providing better sales and negotiation insights and streamlining workflows.

Questions for founders

Insight is excited to partner with the next generation of AI founders from the earliest stages. We believe long-term category leadership will come from a few key ingredients: problem, data, and product. Companies must solve a problem with a clear and material ROI, build a tangible data flywheel, and embed the product deeply into the customer’s day-to-day operations, creating strong customer stickiness.

Here are some key questions Insight investors ask when evaluating a startup. We hope these are helpful for founders beginning to ideate in the space.

- Value Proposition/ROI: Does the product deliver a quantifiable ROI with a quick time to value?

- Product: Is the product deeply integrated into the customer’s day-to-day operations, and so valuable to the customer that it is very difficult to rip out?

- Data Flywheel: Does your product rely on proprietary data and benefit from a data flywheel? E.g., a flywheel between user engagement, data collection, and model performance, where more engagement → more data → better models → more users and revenue.

- Incumbent & Other Threats: Is there a flexible and agile incumbent that can easily launch this product as a feature?

—

Disclaimer: All securities investments risk the loss of capital. Certain statements made throughout this post that are not historical facts may contain forward-looking statements. Any such forward-looking statements are based on assumptions that Insight believes to be reasonable, but are subject to a wide range of risks and uncertainties and, therefore, there can be no assurance that actual results may not differ from those expressed or implied by such forward-looking statements. Trends are not guaranteed to continue.