How Quantum Computing Can Solve Real-World Problems

The history of technology can seem like it was very predictable in retrospect, but the future typically feels more uncertain. Nowhere is this uncertainty more evident than in the domain of quantum computing. When the spectrum of possible outcomes spans from “quantum computers will be one of the most important technology developments of all time” to “quantum computing may never really become practical enough to justify using over a classical computer alternative,” trying to make forecasts can seem futile. However, as a technologist, investor, consumer, or just a curious observer, the boundaries and texture of the uncertainty itself can be very interesting, valuable, and informative.

Quantum computing is unlikely to replace today’s classical computing, at least not initially. Instead, it is a potential complement for specific applications. A 2021 report by Deloitte lists dozens of applications that could be transformed by quantum computing approaches: protein folding, fluid simulation, credit underwriting, financial risk analysis, supply chain optimization and forecasting, vehicle routing, fraud detection, fault analysis, weather forecasting, semiconductor chip design, product-portfolio optimization, and consumer product recommendations. And this is only half the list.

Quantum computing could make previously intractable simulation, search and optimization calculations relatively easy and quick. For some kinds of calculations, a quantum computer could be mind-bendingly faster than a powerful classical computer could ever be. While today’s classical computers running machine learning systems can make incredibly accurate predictions, quantum-based systems can, in theory, far outstrip their speed at certain prediction-related tasks.

The blend of physics, math, and computer science behind quantum computers is fascinating but complex. Computer scientist Scott Aaronson, one of the best science communicators, recently wrote an essay for Quanta Magazine called “Why Is Quantum Computing So Hard To Explain?”

To oversimplify, quantum computers are built around “qubits,” which are subatomic-scale components, often kept at incredibly cold temperatures (think: near absolute zero) to minimize interference from the rest of the universe. These components can be put into a quantum physics state called “superposition” that allows them to, in a sense, take on many potential values at once. Even though their value is uncertain, reliable calculations and transformations can be performed on these qubits while they are in a superposition, before they are reduced to a more classical state, effectively parallelizing computations. Qubits in a quantum computer can also be entangled with one another (a phenomenon that Einstein referred to as “spooky action at a distance”), harnessing them as a team to increase the variety of logical calculations that it’s possible to compute. For certain types of math, such as searching for an optimal outcome among myriad options, quantum algorithms can seem close to instantaneous when compared to those that run on classical computing hardware.
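The superposition and entanglement ideas above can be made concrete with a tiny state-vector simulation on a classical computer. The sketch below is illustrative only: it uses plain Python lists rather than any particular quantum SDK, and the helper names (`apply_single_qubit_gate`, `apply_cnot`, `probabilities`) are hypothetical. It puts one qubit into an equal superposition with a Hadamard gate, entangles it with a second qubit using a CNOT gate, and then computes the probability of each measurement outcome.

```python
import math

# Convention assumed here: qubit 0 is the least significant bit of the index,
# so index 3 (binary 11) means both qubits are 1.

def apply_single_qubit_gate(state, gate, target):
    """Apply a 2x2 gate to qubit `target` of a state vector (list of amplitudes)."""
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        bit = (index >> target) & 1
        for new_bit in (0, 1):
            # Same basis state, but with the target bit set to new_bit.
            new_index = (index & ~(1 << target)) | (new_bit << target)
            new_state[new_index] += gate[new_bit][bit] * amplitude
    return new_state

def apply_cnot(state, control, target):
    """Flip the target qubit in every basis state where the control qubit is 1."""
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        if (index >> control) & 1:
            new_state[index ^ (1 << target)] += amplitude
        else:
            new_state[index] += amplitude
    return new_state

def probabilities(state):
    """Probability of observing each basis state when the qubits are measured."""
    return [abs(amplitude) ** 2 for amplitude in state]

s = 1 / math.sqrt(2)
HADAMARD = [[s, s], [s, -s]]

state = [1 + 0j, 0j, 0j, 0j]                         # two qubits, both starting at 0
state = apply_single_qubit_gate(state, HADAMARD, 0)  # superposition on qubit 0
state = apply_cnot(state, control=0, target=1)       # entangle qubit 1 with qubit 0
probs = probabilities(state)

for index, p in enumerate(probs):
    print(format(index, "02b"), round(p, 3))
```

The outcomes 00 and 11 each come out with probability of about one half, while 01 and 10 never occur: measuring one qubit tells you the value of the other, which is the correlation that entanglement refers to.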

Quantum Computing Challenges

Publicly available quantum computing has been on the horizon for decades, yet there’s still no reliable forecast for when products capable of widespread adoption will get released, exactly what form the systems will take or how broad and deep quantum computing’s impact will be. There are many technical challenges when it comes to getting a broadly applicable quantum computer to be useful at scale. And quantum computers are racing against classical computers, which have also been improving very rapidly in power, versatility, and ease of use.

One important bottleneck for quantum computers is that correctly reading the results of a quantum calculation is prone to a very high error rate—the delicate superposition state can deteriorate before the correct result is presented as an output.

Aaronson explains it:

The problem, in a word, is decoherence, which means unwanted interaction between a quantum computer and its environment — nearby electric fields, warm objects, and other things that can record information about the qubits … The only known solution to this problem is quantum error correction… But researchers are only now starting to make such error correction work in the real world. When you read about the latest experiment with 50 or 60 physical qubits, it’s important to understand that the qubits aren’t error-corrected. Until they are, we don’t expect to be able to scale beyond a few hundred qubits.

Some theorists even believe a practical quantum computer is impossible to build because of the inherently high amount of “noise in the system.” Mathematician Gil Kalai predicts that correcting errors well enough to reduce that noise to a usable level would, by basic computing theorems, leave the computer with so little number-crunching capacity that it would not be worth using.

Nevertheless, researchers continue to chisel away at the challenge of error correction and to pass key technical milestones. For example, in July, Google researchers published a paper in Nature explaining how they have found a promising error-reduction method that scales as more qubits are added to a system.

Hardware, Software, and App Landscape

There are several different approaches to quantum hardware. Rigetti and Oxford Quantum Circuits use superconducting quantum circuits, a widespread approach also favored by Google, Microsoft, and IBM. IonQ (which is planning to go public via a SPAC transaction) and Honeywell (which recently announced a combination of its quantum computing unit with UK-based Cambridge Quantum Computing) use trapped ions. D-Wave uses quantum annealing, ColdQuanta is using cold atoms, and Xanadu is using photonics. Each approach has its pros and cons, and each hardware technology faces a shifting set of bottlenecks in reaching an affordable scale. Work at the materials layer continues to advance as well. For example, Raicol Crystals is growing quantum quasi-phase matching crystals that can be used in applications as varied as quantum computing, sensing, encryption, and communications. It is also possible that another approach will leapfrog all of these in solving the challenges of error correction and price.

Other companies, such as Quantum Machines, Q-CTRL, and Seeqc, are building control hardware that can operate in the space between classical and quantum hardware. Control systems can exist at temperatures warmer than absolute zero, but colder than room temperature. Some of these systems involve both software and hardware and can perform tasks like monitoring the quantum processor’s performance, performing error correction, or serving as an abstraction layer connecting classical and quantum hardware.

There are also companies creating quantum computing application-layer software, such as Zapata Computing, Riverlane, and QC Ware. This can include application frameworks, components, algorithms, or even turnkey quantum computing applications. Classiq has software for quantum circuit synthesis. There’s also a lot of interesting fundamental research happening at the intersection of quantum computing software and artificial intelligence. For example, Zapata recently published work that used IonQ’s ion trap quantum system to train a machine learning system to generate high-quality handwritten digits with low error rates.

One of the first theoretical uses for quantum computing, proposed in 1994 by mathematician Peter Shor, would be to crack the RSA encryption widely used today to protect data. The threat spawned research into post-quantum cryptography, with researchers proposing multiple new approaches to encryption that would need to replace today’s algorithms to preserve the privacy of data. Companies such as ISARA (quantum-resistant encryption) and Quantum Xchange (an out-of-band, symmetric key delivery platform) emerged to help governments and companies prepare now for a world where quantum algorithms can break today’s encryption.
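To see why Shor’s proposal threatens RSA, it helps to know that RSA’s security rests on the difficulty of factoring a large number, and that factoring can be reduced to finding the period of a modular exponentiation. The sketch below is a toy illustration under stated assumptions, not Shor’s algorithm itself: the period is found here by classical brute force, which is exactly the step a quantum computer would be expected to speed up. The function names are hypothetical, and `n` is assumed to be a product of two distinct odd primes.

```python
from math import gcd

def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Assumes gcd(a, n) == 1. This classical search is a stand-in for the
    quantum period-finding step and is far too slow for real key sizes.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_period(a, n):
    """Try to split n using the period of a modulo n; return None if a is unlucky."""
    shared = gcd(a, n)
    if shared != 1:
        return tuple(sorted((shared, n // shared)))  # a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: try a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # yields only trivial factors: try a different a
    return tuple(sorted((gcd(half - 1, n), gcd(half + 1, n))))

factors = factor_from_period(7, 15)
print(factors)
```

With `a = 7` and `n = 15` the period is 4, and the two greatest common divisors recover the factors 3 and 5. Real RSA moduli are hundreds of digits long, which is why the brute-force loop is hopeless classically.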

There are several widely used software development kits (SDKs) that let developers write software for a quantum computer without actually having one to run it on. In fact, a kind of format war has already broken out between quantum computing development environments.

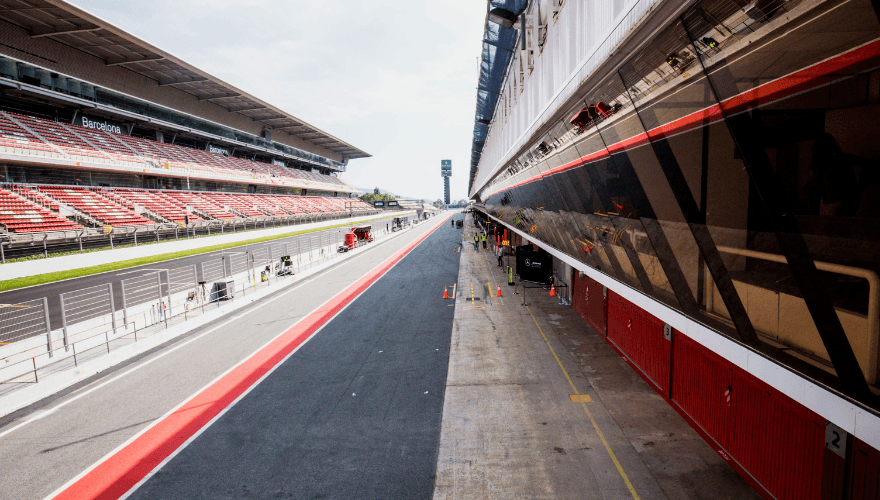

One widely used quantum SDK is IBM Qiskit, an open-source Python-based development kit for prototyping software and devices on simulators or on IBM Quantum Experience, a cloud-based quantum service. ExxonMobil, Goldman Sachs, Boeing, JP Morgan, Samsung, and PayPal are just a few of IBM Quantum’s marquee customers. Google Cirq, another Python-based development kit, touts Volkswagen as a user, and Volkswagen is also a contributor to Google’s TensorFlow Quantum, an open-source library that extends Google’s TensorFlow machine learning library to quantum processors for rapid prototyping. Microsoft’s Quantum Development Kit (QDK) is based on Microsoft’s Q# programming language and brings quantum compatibility to Microsoft Azure cloud services. Early customers of Azure Quantum include Dow, for chemical simulations, and Toyota Tsusho, Toyota’s trading arm, which is developing traffic light optimizations to reduce urban traffic congestion.

In a recent piece, The Information noted that “Amazon is looking to hire more than 100 new quantum computing scientists, hardware developers and engineers within its Amazon Web Services cloud business,” following the enormous amount of funding flowing to startups in the space, such as Quantum Motion, Atom Computing, and PsiQuantum. Amazon also launched Amazon Braket, a fully managed quantum computing service that gives customers access to hardware from a diverse set of vendors such as IonQ, Rigetti, and D-Wave.

It’s also possible that the most valuable quantum platforms won’t be versatile, horizontal platforms at all; rather, the space could evolve instead to include special-purpose machines, or quantum application-specific integrated circuits (ASICs). Unlike the way things have played out with classical computing, this could mean that quantum computers would be highly specialized integrated hardware and software machines tailored to specific applications, e.g. fluid simulation or fraud detection.

Early use cases may involve quantum systems taking specialized roles as part of a larger classical system, similar to how NVIDIA’s graphics processing units (GPUs), which often run alongside an advanced CPU, can be used to offload specific computations for which the GPU is well-suited. In this model, where quantum components are an “accelerator” to classical systems, a credit underwriter might use a quantum processor to handle one of the tasks involved in scoring applicants and might use a different special-purpose quantum processor to perform a piece of a calculation to optimize its borrower portfolio.

For many use cases, the error rates of calculations are still too high for developers to do more than hone their coding chops on quantum simulation platforms while waiting for quantum computers to really work at scale. Quantum simulators, such as QMware, let quantum algorithms and applications run as though they were on actual quantum hardware, when they are really running on classical computers emulating quantum computers.
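A rough calculation shows why classical simulation only goes so far. The sketch below assumes the simplest simulation strategy, storing the full state vector, with each amplitude held as a 16-byte complex number. Both are assumptions made for illustration and say nothing about how any particular product, QMware included, is built. Under those assumptions, every added qubit doubles the memory required.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number as two 64-bit floats

def state_vector_bytes(n_qubits):
    """Memory needed to hold all 2**n amplitudes of an n-qubit state vector."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

def human_readable(num_bytes):
    """Format a byte count using binary units (KiB, MiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(num_bytes)
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value:.0f} {unit}"
        value /= 1024

for n in (10, 30, 50):
    print(n, "qubits:", human_readable(state_vector_bytes(n)))
```

Ten qubits fit in 16 KiB and thirty qubits in 16 GiB, but fifty qubits would need 16 PiB. That is why simulators are useful for learning and prototyping at small scale rather than as a substitute for large quantum machines.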

In the meantime, to manage some of the timing uncertainty, quantum-related startups can pursue profitable projects with government, corporate, and university buyers today as a source of funding and requirements. Like some biotech startups, they can do this while assembling a strong team and seeking an early adopter clientele, progressing through scientific milestones while keeping an eye on future commercial markets.

The hurdles to generally available quantum computers are much like those faced by alternative energy sources: Unlocking the potential will require breakthroughs in hardware design, lower manufacturing costs, and a driving force to spur large-scale adoption. And many approaches won’t work.

And as with any technology, it is, of course, possible that software developers will find valuable applications for quantum processors that no one has thought of yet, just as developers found that GPUs meant to handle graphics processing also excel at training highly parallelized machine learning models.

That said, a broadly useful and effective quantum computer’s arrival has the potential to be as disruptive an opportunity as the creation of the Internet or the use of machine learning to make predictions.