Managing Cloud-Based Gross Margins While Scaling Operations

In every born-digital SaaS ScaleUp, cloud computing costs can grow to be the single largest expense item, besides people. Whether cloud costs are shown on the gross margin line - or below the line in R&D spend - at some point, companies need strategies to curb the runaway spend.

ScaleUps are discovering that cost optimization opportunities include not only smarter spending to reduce the overall cloud-provider bill, but also extend to making use of tools and technologies to improve upon the core services they offer to customers. Assessing and reducing costs is just the starting point because using cloud tools and processes can also improve the end-user experience at scale.

Three examples below show how Insight Partners portfolio ScaleUps are applying cost-optimization planning and technology adoption to successfully scale operations and improve the end-user experience.

Starting point

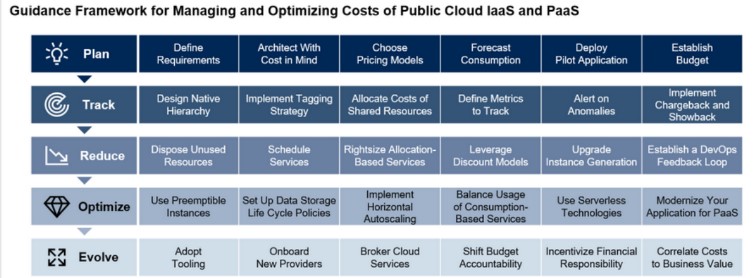

As a jumping off point, Gartner offers a framework for improved management of infrastructure as a service (IaaS) and platform as a service (PaaS). With it, organizations can benefit from a more intelligent and efficient consumption of cloud services, while improving services for the ScaleUp’s customers. The framework is equally applicable to SaaS and other cloud-based application and service providers.

The five-step framework that Gartner describes consists of:

Step 1/ Plan: Define requirements and forecast consumption to inform budgets and baseline pricing to compare against real cloud costs once the bill is received.

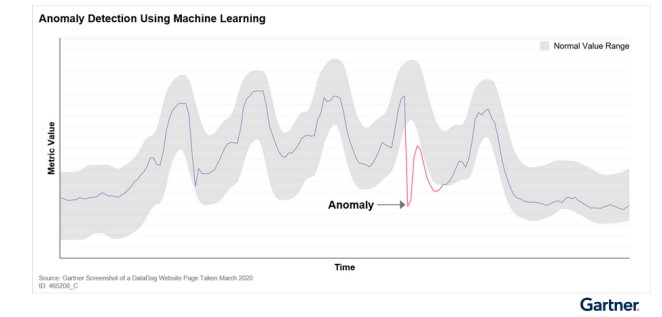

Step 2/ Track: Similar to tracking application performance, ScaleUps can implement ways to track, monitor and observe their cloud spending in detail. Observability tools can play a huge role in the automation of tracing and monitoring cloud costs, in addition to application and services performance. Technologies such as machine learning can be used to help automate the process of detecting unforeseen and unexpected jumps in cloud storage or compute usage.

Step 3/ Reduce: ScaleUps can begin reducing their monthly bills once they have proper visibility into their cloud consumption and associated pricing.

Step 4/ Optimize: ScaleUps are going beyond saving cloud costs — they are optimizing their resources and adopting technologies to more efficiently deliver and improve upon the end-user experience.

Step 5/ Evolve: Eventually the goal is to create an organizational shift to improve cost across the company, for both hosting client-facing product and company applications.

Gartner's framework is highly useful in optimally benefitting from public cloud platforms such as AWS, Azure, and Google Cloud. It involves continuously making the right choices in terms of which infrastructure services to use, how to size and scale this infrastructure, and how to prevent underutilization and zombie resources, Torsten Volk, managing research director for Enterprise Management Associates (EMA), said. “Understanding your organization’s application requirements is key when it comes to solving this riddle, as most organizations are unable to optimally predict an application’s resource requirements,” Volk said. “Without a clear understanding of how application requirements map to what a public cloud has to offer, application teams can err toward overprovisioning to be on the safe side.”

Insight's portfolio companies are deploying strategies to reduce spend, while simultaneously improving performance and customer experience. Three examples are described below.

Choosing CPUs for performance

Newer ScaleUps, typically, are not overly concerned with running servers or managing data centers. After all, cloud providers and the technologies they offer have often enabled ScaleUps to more efficiently provide SaaS or even direct-to-consumer services much more cheaply than their less-nimble competitors that can have onerous legacy equipment and systems to manage on-premises.

However — lest we forget — the computing performance of services that cloud vendors offer still remains very much tied to the decades-old CPU and memory configurations. The right CPU choices on cloud servers — often called “bare metal” when the a company pays for computing on the hardware it selects — can translate into improved computing performance by offering lower latency and increased throughput (with direct cost benefits). This can translate into improved performance for a SaaS service — delivered at a lower cost.

An excellent case in point about how the choice of CPUs on cloud provider servers can offer was described by Liz Fong Jones, principal developer advocate of Insight's portfolio company Honeycomb. Jones offered details during a presentation at the recent AWS Summit New York 2022.

Customers might use Honeycomb to debug or discover issues with their systems or applications involving the analysis of billions of lines of code. Three years ago, Honeycomb’s platform was ingesting about 200,000 trace spans per second. Today, those trace spans have skyrocketed to 2.5 million trace bits per second. “Our customers are asking 10 times as many questions about 10 times as much data. So, how did we scale our services economically?” Jones said. “And did I mention we only have 50 engineers?”

For Honeycomb’s observability ingest service, Honeycomb saw a 10% improvement in the median latency, with significantly fewer latency spikes, when shifting adoption from fifth-generation Amazon Elastic Container Service (ECS) to AWS’s Gaviton2 CPU on ECS. When Honeycomb performed tests with Graviton2 versus Graviton3 with ECS, the company found a further 10% to 20% improvement in tail latency, and a 30% improvement in throughput and median latency of its ingest services with ECS, Jones said. The end result is that Graviton upgrades have translated into significant cost savings as Honeycomb improved its platform’s computational and latency capabilities at scale, Jones said.

What this means for Honeycomb users is that a customer query about a complex system or application can be answered in seconds instead of minutes. “What do I mean by as quickly as possible? I mean you should be able to answer any question in less than 10 seconds,” Jones said. “You don't get distracted by going to get a cup of coffee.”

Better performance for observability tracing is but one example of how a CPU upgrade can offer improvements that users notice. Improved process performance can significantly accelerate machine learning workloads, video encoding, batch processing, analytics, and other use cases that require massively parallel processing, built-in encryption and the ability to connect vast amounts of RAM to CPU cores, Volk said. “The more tightly an organization is able to pack their application workloads onto Graviton on ECS, for instance, the more cost savings it will harvest while at the same time benefiting from completely consistent compute performance,” Volk said.

Improving automation for backup & DR

Improving the automation of backups and disaster-recovery protection (including ransomware protection) from the cloud can also reduce cloud costs while improving application performance for customers. The ROI of the ability to mitigate the effects on the user experience of a ransomware attack or to restore data in the event of disaster is obviously very high indeed (needless to say, the surge in ransomware attacks pose existential threats to the entire business).

Less urgent than ransomware and disaster-recovery protection — yet important — is the selection of the right cloud service and tools for storage. As simple testing will show, differences in latency, capability and reliability can vary among cloud vendors, with a direct impact on performance for end-users. The choice of the proper system is also critical for the optimization of cloud costs.

Kasten Gaurav Rishi, VP of Product for the Insight portfolio company Veeam believes that a backup and DR system must be extremely simple to use in a cloud-native environment, while allowing the choice of underlying infrastructure and deployment architectures across clusters, regions, and even clouds. This freedom of choice enables enterprises to not only select the optimal compute and storage platform for their growing footprint of applications but also continuously optimize their costs by rehydrating applications along with the data to other environments.

“Manually scripting and configuring backups and disaster-recovery processes and then managing these dynamically changing variables on an ongoing basis wastes valuable resources for organizations,” Rishi said. “Automating the process — with the right tools — not only optimizes human resources and cloud costs, but is instrumental in reaching scaling goals.”

Volk agreed that cloud backups and disaster protection can lead to cost savings when implemented in an efficient manner. “Too many organizations lack the ability to optimally automate these traditional enterprise IT capabilities for optimal cloud use, leaving them with unpredictable RTOs and RPOs,” Volk said.

In addition to upgrading the CPUs on which Honeycomb’s platform runs on AWS’ cloud, Honeycomb has sought to improve its storage performance for better latency for its users’ data retrieval needs. AWS’ Lambda serverless platform is used to support Honeycomb’s customers’ queries on demand, involving “millions of files” from S3 — AWS’ storage platform — Jones said. Honeycomb’s use of Lambda and S3 and Graviton upgrades has translated into a 40% improvement in price performance. The technology adoption was also instrumental in providing results for customers' queries “in less than 10 seconds,” Jones said.

Reducing overprovisioning

Since cloud services are priced by seat rather than by hour of usage, they spend much of their time idling. While this cannot be avoided completely, automated processes allow departments to be more diligent in removing resources that are no longer in use.

Indeed, the topic of paying for idle resources is “as old as the public cloud,” Volk said. “Organizations are still struggling with automating provisioning and decommissioning workflows in a complete and consistent manner,” Volk said.

Close monitoring of cloud usage, redundancy, and pricing as described above in the Gartner framework serves as the starting point to pinpoint over-capacity pain points in operations. If left unheeded, these paint points can translate into significant wasted spending.

However, some organizations allow for over-provisioning to occur, as it can — in theory — help to make SaaS and other services more resilient against downtime. Organizations also want to be ready to handle cases where load suddenly spikes during peak usage loads by having spare cloud-server capacity at their disposal.

Overcapacity can particularly represent a problem for those organizations deploying or managing applications and data in cloud-native environments on Kubernetes or relying on virtual machines for operations, notes Matt Butcher, CEO at Insight portfolio company Fermyon Technologies. “Whether infra ops folks are using virtual machines or container-clustering technologies like Kubernetes, the accepted design pattern is an expensive one,” Butcher said. “‘The strategy for running services relies upon overprovisioning. Overprovisioning occurs when you provision more cloud resources than you require under normal load.”

In practice, overprovisioning means running multiple copies of the same application, and running them continually, even when many or all of them are sitting idle. For example, a small microservice may be packaged in a single container. “In this case when deployed to Kubernetes or a similar system, the prevailing wisdom is (for the sake of availability and handling spikes in load) to provision at least three copies of the container,” Butcher said. “That means three copies are running all the time. Assume for a moment that average load can be handled by one instance. Each of these constantly consumes memory, CPU, storage, and bandwidth.”

An open source alternative can help avoid over-capacity, Butcher said. The use of WebAssembly, which provides direct-to-CPU binary instruction sets to VMs, has been shown to help solve some over-capacity issues. A WebAssembly application, for example, can start in less than a millisecond for some server instances, Butcher said. “That means it is cheap and easy to start them up and shut them down,” Butcher said. “On the server side (thanks to ahead-of-time compiling and caching), rather than running long-running stateless services (as is popular in the container ecosystem), a platform can shut down anything not actively handling a request, and then start it up again on demand.”

What does all of this mean in terms of lowering cloud costs? Effectively, a WebAssembly-based platform can run more modules (over 1,000, according to some benchmarks) on an AWS large 32G RAM ($72 per month) than can be achieved using containers (724 containers, costing $849 per month or $1,900 per month), Butcher said. “And because the platform can under provision so effectively, they can run smaller clusters with fewer nodes in addition to allowing end users to run smaller virtual machines and achieve higher density,” Butcher said.

The final matter

The above examples show how three Insight Partners portfolio ScaleUps are applying cost-optimization planning and technology adoption to successfully scale operations to improve the end-user experience. Many more examples exist under Insight Partners’ portfolio umbrella. They illustrate how cloud environments are under particular scrutiny by ScaleUps as major cost centers as CxOs also realize how cloud resources remain a principal conduit for growth and scaling. The cost-optimization opportunities include not only smarter spending to reduce the overall cloud-provider bill, but extend to emerging tools and technologies.

The opportunities cloud resources have offered SaaS companies from the outset remains: numerous infrastructure, capacity and other services are often available at a fraction of the cost compared to those competitors running traditional data centers and legacy equipment. At the same time, ScaleUps are discovering new technologies to distance themselves even further from the competition by using the cloud to deliver improved services in a cost-optimized way.

Along your journey to optimize cloud operations and their associated costs to meet customers’ needs at scale, Insight Partners is here to support your organization and partner with you in making the best technology choices.