
Adopting generative AI is no longer optional — it’s becoming essential to stay competitive. As usage surges across the enterprise, security teams are under pressure to keep pace.

CISOs are already grappling with an 890% increase in genAI-related traffic through 2024. LLM-powered applications are surging, and we’re sprinting head-first into widespread deployment of AI agents. The security stack is struggling to keep up.

With billions in enterprise value at risk, we believe the overall winners here will be those who can move quickly enough to securely enable business adoption of genAI.

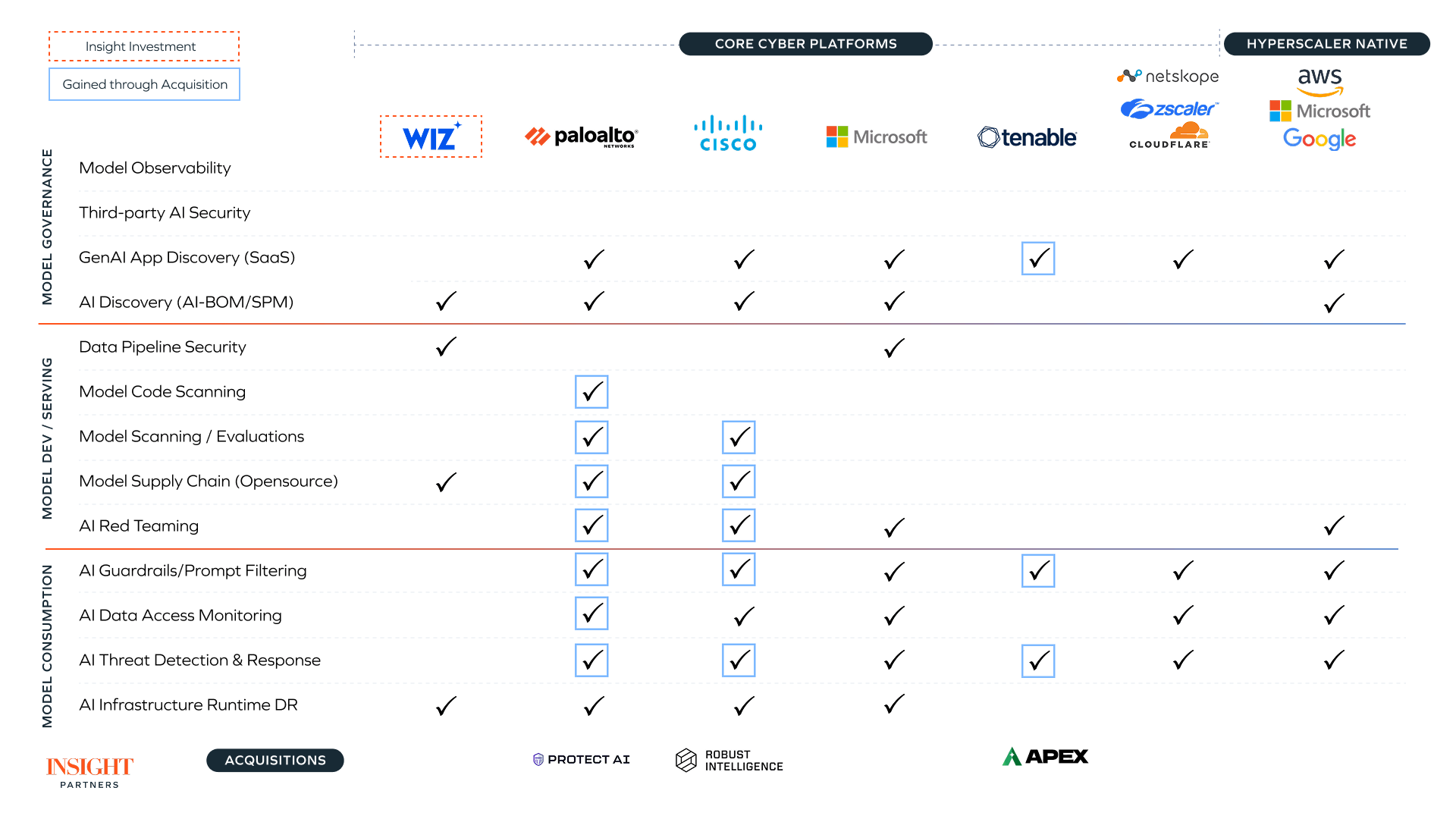

But where should CISOs be looking for those solutions? New technology brings with it novel threats and a need for innovation. GenAI is no different, and we’ve seen an influx of new AI security startups alongside incumbents building or buying AI security capabilities to enhance their platform offerings.

This fragmented and noisy landscape raises questions for enterprises, founders, and investors alike about where they should allocate their time and resources in deploying or delivering the next generation of security products.

At Insight Partners, we believe AI security is a foundational pillar of the modern enterprise stack — and one of the most urgent opportunities in infrastructure today.

As genAI adoption accelerates, startups that enable safe deployment through runtime protection and secure model development are well-positioned to lead. In the near term, we see strong momentum in AI firewall capabilities; longer term, the biggest opportunities lie in securing AI agents and the broader software supply chain.

This is the first in a series exploring how AI is reshaping cybersecurity and the opportunities for innovation in this rapidly evolving technology domain. You can follow Insight Partners on LinkedIn to be sure you don’t miss future articles and discussion.

Enterprises are deploying AI fast, and CISOs are bracing for impact

We speak with hundreds of enterprise CISOs and CDAOs every year, and while genAI is being adopted carefully, the pace is accelerating. Internal productivity use cases, built on tools like Microsoft Copilot, GitHub Copilot, and ChatGPT Enterprise, dominate. Where there is clear ROI, some enterprises have also deployed AI-enabled services for external use cases, such as chatbots and agentic workflows for customer engagement and sales support.

Most teams are still using off-the-shelf models from providers like OpenAI or Anthropic, usually with basic retrieval-augmented generation (RAG) to tap into internal data. A few are going deeper by fine-tuning or customizing models, but they’re the minority. For now, concerns around security, compliance, and unclear ROI are keeping most companies from going further.

As one CISO describes it:

“Right now, we’re just using Microsoft Copilot and foundational models in Bedrock… It’s all internal use cases… we don’t have any requirement today for internet-facing AI models, and before we do that, we’ll need to put something in place, the same as we do with WAFs for applications.”

The primary concern is with model output, specifically excessive data exposure and the potential for reputational damage or even regulatory repercussions resulting from model responses. And that’s where we’re seeing immediate interest from the enterprise — in guardrails, prompt filtering, and red teaming:

“We have AI-enabled products already, and so we’ve built a security layer in front of the genAI tools we’re using…We’re really concerned about the access to data.”

What’s more, CISOs are watching business adoption build and are already evaluating AI security capabilities, ready to ‘push the button’ when they need to deploy something more substantial:

“We’re not in a position to buy yet, but we’re actively evaluating solutions…There will be a need to buy something, and I want to be ready.”

As genAI adoption matures, we believe demand will pivot toward security across the development lifecycle and toward open-source security capabilities, but we’re not there yet.

As established players scramble to build or acquire these capabilities, their ability to move quickly will determine whether security leaders stick with them or turn to more agile, specialized AI security vendors:

“I don’t want to have to default to Palo or Microsoft for solving all our problems…We’re looking at AI security vendors to provide both diversity and coverage.”

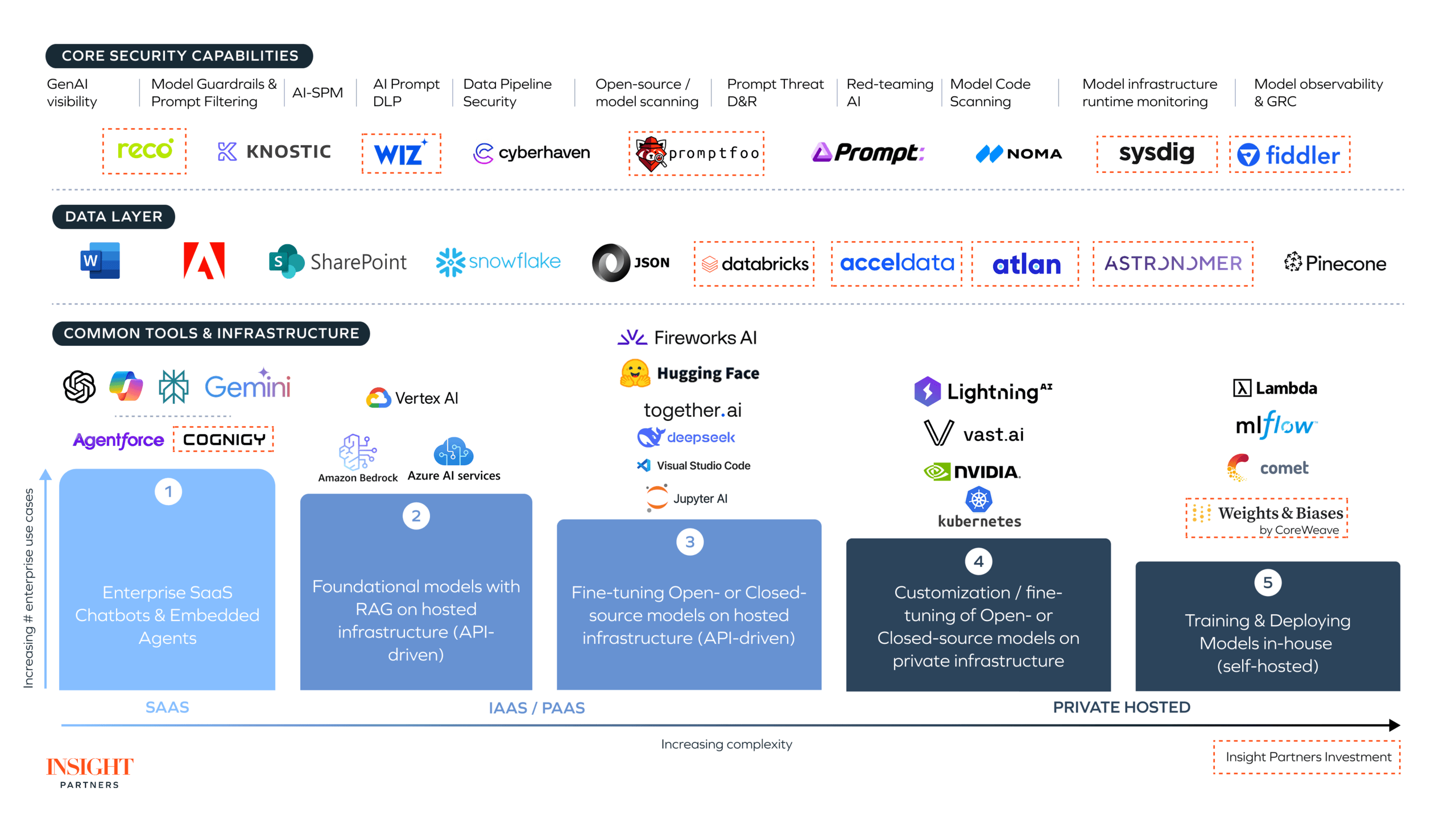

These adoption stages are shown below, along with examples of applications, infrastructure, data, and security solutions.

The two frontiers of AI security: Development and runtime

As companies move from experimenting with AI to deploying it in production, security concerns are quickly becoming more defined — and more urgent. Two clear categories of AI security are emerging.

The first is model development security, which focuses on ensuring models and applications are secure by design. This includes practices like red teaming, model scanning, and maintaining hygiene around fine-tuning. It’s also reshaping roles within tech teams with the rise of the AI engineer, a role that blends data science, infrastructure, and security expertise.

The second category is model runtime security, which centers on safeguarding AI systems in production. Here, techniques like prompt filtering, data loss prevention (DLP), and defenses against model-specific threats come into play. This space heavily relies on traditional SecOps and infrastructure playbooks, which have been adapted for a new class of workloads driven by generative models.

Incumbents have predictably focused on runtime. It’s an easier wedge, extending proxies and network visibility into LLM traffic. However, enterprises aren’t yet convinced of their ability to deliver. Startups and ScaleUps can fill the trust and functionality gap that exists between legacy security platforms and the new challenges introduced by genAI systems — particularly in areas where traditional approaches fall short.

And what about security through development? Enterprises typically don’t rely on their platform vendors for application security (AppSec), and we expect the same for secure AI app development. We believe the opportunity here lies with startups, which we expect to win out over cybersecurity platforms, along with a few incumbent AppSec vendors that pivot into the space.

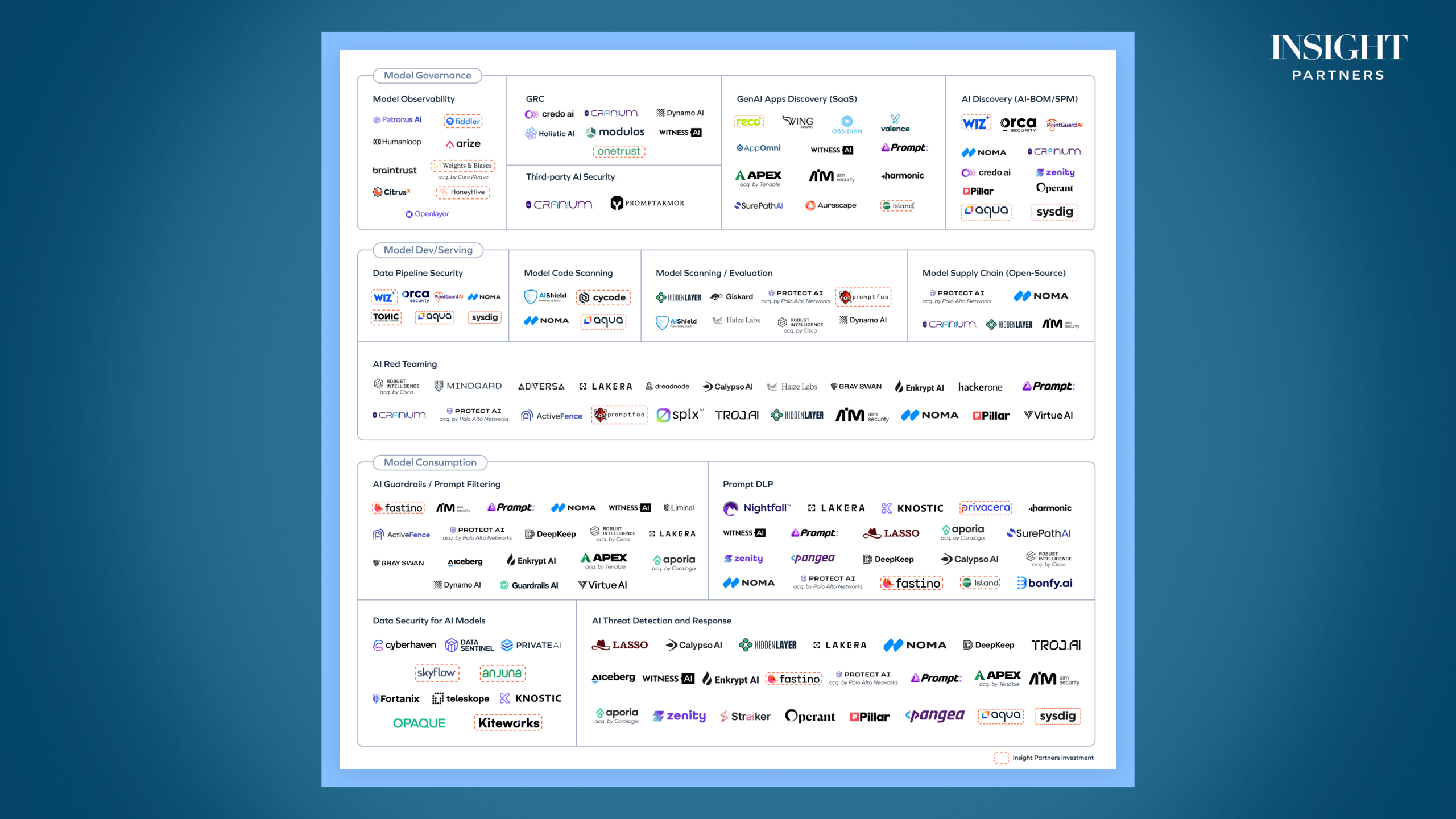

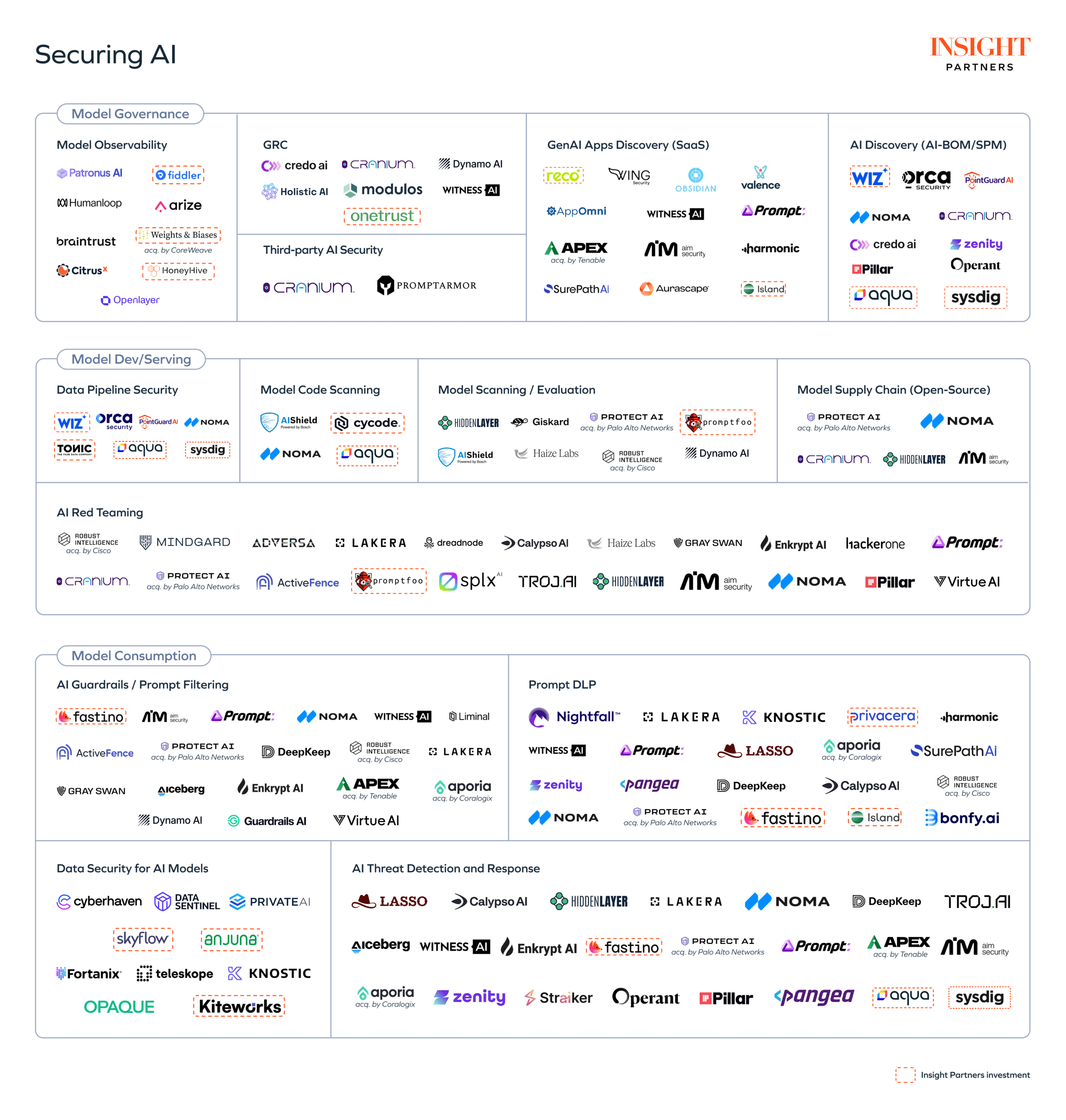

Which brings us to the startup ecosystem, as seen in our market map below.

The space around prompt filtering, AI threat detection and response (that is, detecting and blocking malicious prompts), and AI red teaming capabilities has become extremely crowded — and it’s ripe for consolidation, with major acquisitions in these categories already.

Fewer startups have entered the model development security space. Enterprise demand here is lower today, but we see this as an area of high growth as the focus shifts toward building and deploying AI apps and AI agents. And with Python fast becoming the backbone of enterprise AI development, securing the AI software supply chain has massive untapped potential.

The endgame for AI security

Enterprises are still in the early stages of genAI adoption, but this space is moving incredibly fast. Without immediate action, security will become a costly afterthought.

In the short term, AI firewall capabilities — specifically, guardrails, prompt filtering, and AI threat detection and response — will capture buyer attention. Startups and ScaleUps are winning here today because incumbents have been too slow to develop the capabilities needed to truly dominate the market. But we think significant consolidation could happen here.

Longer term, the gravity shifts. Secure model development, AI software supply chain controls, secure agent behavior — this is where the next big opportunity arises. GenAI is expanding its presence in enterprise infrastructure, and security must be integrated with it.

These outcomes hinge on current trends in enterprise genAI consumption continuing. AI agents are the next wave of innovation: they are already changing the way enterprises build and deploy AI services, and they require a complex security model. We’ll explore this topic in our next article.

This is a fast-moving target, and we’re always keen to hear from those who are at the forefront of driving secure adoption of AI. Reach out if you want to continue the conversation with our team:

George Mathew

Hunter Korn

Ash Tutika

William Blackwell

Editor’s note: Insight Partners has invested in Aqua, Wiz, Reco, Cognigy, Databricks, Acceldata, Atlan, Astronomer, Sysdig, Fiddler, HoneyHive, Island, Tonic.ai, Fastino, Privacera, Anjuna, Skyflow, Cycode, Promptfoo, Kiteworks, OneTrust, and Weights & Biases (acquired by CoreWeave). Quotes in this article have been sourced from industry conversations and anonymized with permission.