Building a moat in the age of AI

As investors, we’ve been asking founders the same question for decades: What’s your moat? In the age of AI, that question has become both more urgent and more complex.

Many of the barriers to building software have collapsed. No-code platforms and coding copilots have dramatically shortened the path from idea to product. Foundation models have given builders superpowers: off-the-shelf intelligence that used to take years (and millions of dollars) to create. The “app layer” is increasingly capable without needing to build its own foundation. Building has become plug and play.

But the same technology that accelerates innovation can also compress differentiation. The most ambitious founders we meet are wrestling with this paradox daily: If anyone can build quickly, how do founders build something that lasts?

The challenge has intensified as foundation model providers such as OpenAI*, Anthropic*, Google, and others have begun moving up the stack into the application layer. OpenAI’s release of AgentKit sparked anxiety across the startup ecosystem: Did this kill the entire category of AI agent builders overnight? Similar questions echo across many verticals: If you’re building for lawyers, insurers, bankers, or accountants, how long before the foundation models do it themselves?

As investors, we’re being forced to evolve just as quickly. This isn’t the gradual shift from on-prem to cloud; it’s a rapid cycle of adoption, saturation, and redefinition. First-mover advantage is no longer enough. The growth rates that set apart the fastest-growing software companies of the last decade are now “table stakes.” We need new frameworks to evaluate what a durable advantage looks like in this AI-first world.

And for founders, that question is existential. To get ahead, you need a strong team with deep, differentiated technical expertise, lightning-fast iteration cycles, and a deep understanding of your customer base. To stay ahead, you need to reevaluate constantly: How do you build a moat in the age of AI?

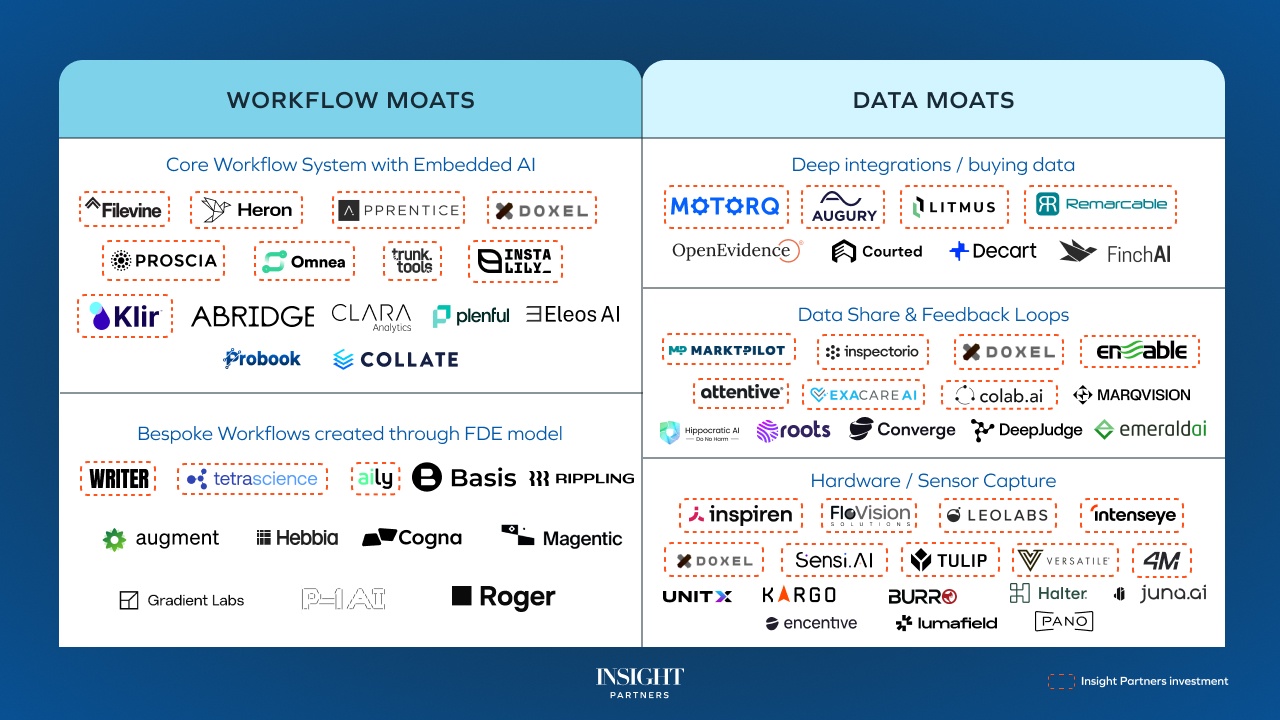

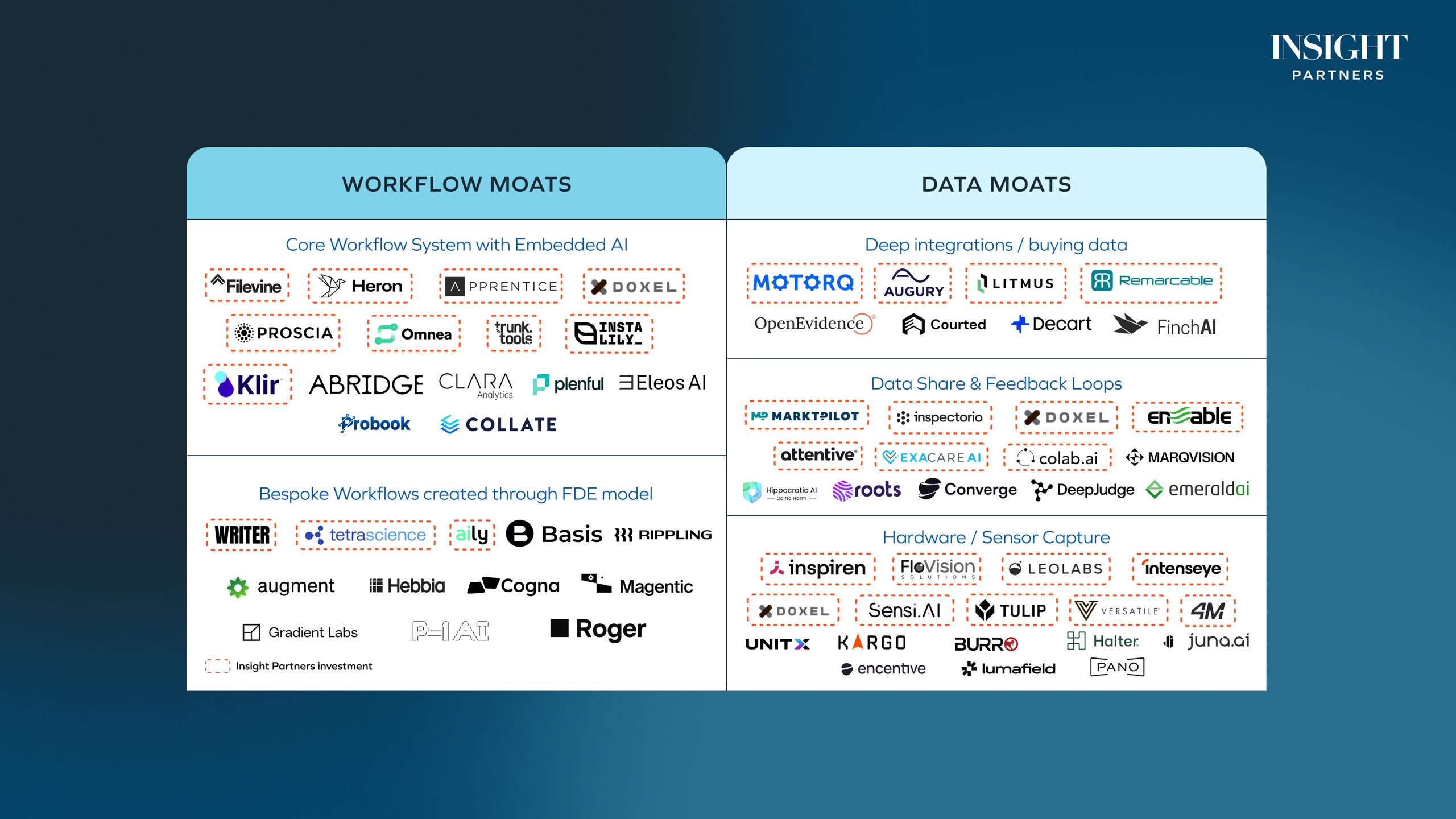

The North Stars for vertical software in the AI era

We see two major categories where vertical AI companies can build real moats: workflow depth and proprietary data. Whether through core workflow systems with AI deeply embedded, forward-deployed engineering, custom integrations, or novel data capture, these are the edges that create compounding advantages.

Owning the workflow: Core workflow systems with embedded AI and the FDE model

A chat window fine-tuned by prompt engineers and industry experts might get you early adoption, but not defensibility. The companies that will endure are the ones that build systems of record and engagement — platforms their users live in all day, where work is created, shared, and stored.

Filevine* is a powerful example. Over the past decade, Filevine has become a leading operating system for legal work, combining CRM, case management, time tracking, and billing into a single platform. When AI arrived, Filevine didn’t bolt it on; it extended its core system. Now, lawyers can draft demand letters, summarize depositions, or chat with their cases, all within the same trusted environment where their data already lives. This keeps the interface tightly aligned with its users: Filevine meets its customers where they already work.

For newer companies, embedding deeply doesn’t have to mean decade-long buildouts. We’re seeing a resurgence of the forward-deployed engineering (FDE) model, popularized by Palantir, where teams work hand-in-hand with customers to map bespoke workflows and automate them quickly. Today, AI can amplify this approach: Systems can observe user behavior, suggest optimizations, and even generate custom automations in near real time.

Historically, investors were wary of heavy implementation cycles. They slowed time to value, and bespoke solutions limited scalability as the customer base grew. Implementation has always driven stickiness, but in the AI era, faster setup and adaptive configuration allow companies to deliver tailored solutions without sacrificing the scalability of software.

Basis takes this approach in accounting. They put “boots on the ground,” working side by side with practitioners to understand each firm’s policies and processes and to configure the platform to reflect how the firm wants to work. Basis deploys an agentic platform that learns each firm’s workflows and takes on real end-to-end accounting work without the overhead or slowness of writing custom code.

These hands-on deployments become a moat in themselves. Each implementation surfaces new inputs and data patterns that feed back to the platform engineering and ML teams, compounding into faster time to value for new customers and unlocking richer workflows for existing ones. And WIQ*, building copilots for forward-deployed engineers, shows how even the process of embedding can itself become a product wedge.

These companies don’t just offer new AI tools; rather, they embed AI where the work happens. Workflow depth becomes even more defensible when you create specialized workflows and embed them directly into existing enterprise systems. Instead of your team juggling disparate systems, the work gets automated piece by piece, over time, within your existing workflows and systems.

Owning the data: Integrations, customer data, feedback loops, and hardware capture

If workflow depth gives you stickiness, proprietary data gives you a compounding advantage. Access to specific, often messy and unstandardized data remains one of the strongest moats in AI. Foundation models can do powerful things with public data, but they don’t have every bespoke integration, every pool of private customer data, or every edge-device feed.

Buying, or integrating with, specific hard-to-access datasets provides even more of a moat in the age of AI than it used to, because that data can now also be used for training, multiplying its impact. Consider Motorq*, which has spent years building direct integrations with major automotive original equipment manufacturers (OEMs). That effort gave them access to real-time vehicle data that few can replicate. Today, they offer fleet analytics and predictive maintenance tools that surpass generic solutions.

Another pattern is earned data access, where customers willingly share data in exchange for value. This comes in two forms: customer data stored on the platform, which can feed the reference and context layer, and feedback loops. On the first, Markt Pilot* aggregates pricing data for industrial spare parts across customers, building a specialized corpus of market information. That dataset can power predictive pricing recommendations, creating a moat that widens with every customer onboarded.

Remarcable* in construction procurement has a data advantage through integrations with the long tail of mechanical, electrical, and plumbing (MEP) suppliers and distributors, while also collecting proprietary information on buying patterns, payment terms, and volume, to improve demand forecasting.

The second form, though still early, is the moat that emerges from continuous learning loops, where customer data, user feedback, and network effects improve output performance over time. Each interaction, correction, or decision feeds the inference layer, creating a compounding advantage. In some markets, first-mover effects amplify this advantage, particularly in specialized verticals with rich but underutilized data.

Take ExaCare*, which supports admissions at skilled nursing facilities: Every AI-generated recommendation requires a human to approve or decline it, creating a reinforcement loop that refines the system’s accuracy. It’s still too early to know how fast these loops will evolve, or how easily new entrants can build comparable context layers, but early movers who establish these feedback flywheels are building moats that deepen with every customer and every interaction.

Even hardware is reemerging as a critical moat enabler, as edge devices and sensors capture data that others can’t. Inspiren*, which sells AI-enabled sensors to senior living facilities, can monitor resident safety, care activity, and staff efficiency. Because their sensors see what others can’t (and are privacy-protected), their data capture provides visibility into an industry that previously lacked real data to train on.

The potential data advantage of vertical markets reframes how we can think about building on top of foundation models. Bigger LLMs will have their place in the world, but there will also be room for smaller, vertical-specific model layers trained on bespoke data.

Is OpenAI eating the world?

Yes — and no.

Yes, foundation model providers are moving fast and capturing value. They’re building integrated ecosystems where users spend more time and money. But that doesn’t spell the end for vertical AI. In fact, it clarifies where opportunity still lies: in building workflow ownership, data ownership, or both.

The next generation of vertical AI companies won’t win by competing against OpenAI; they’ll win by building on top of it, embedding into the workflows, data, and trust layers that OpenAI can’t reach. They’ll have their own customer relationships, their own proprietary data, and their own reasons to exist.

We believe these companies will define the next decade of software. Faster to market, deeply integrated, and defensible not because they built the biggest model, but because they built the most human one: the one that truly understands how their customers work.

*Note: Insight has invested in Filevine, Motorq, ExaCare, Inspiren, Remarcable, Markt Pilot, WIQ, OpenAI, HeronAI, Apprentice, Doxel, Proscia, Omnea, Trunk Tools, Instalily, Klir, Writer, TetraScience, Aily, Augury, Litmus, Inspectorio, Enable AI, Attentive, Colab.ai, FloVision, LeoLabs, Intenseye, Sensi.AI, Tulip, Versatile, 4M, and Anthropic. For a complete list of Insight portfolio companies, please visit insightpartners.com/portfolio.